Empirical Comparison of Two Algorithms for Minimum Distance Between Elements

This report outlines the steps taken to analyse and compare two different algorithms for finding the minimum distance between two elements in an array. For each of the algorithms being analysed, a basic operation and problem size were chosen before rigorously testing each of them under identical conditions so as to obtain comparable results.

1.1. Algorithm 1: MinDistance

Each element of A is checked against itself and the other elements of the array. Although there is a line of code later in the algorithm which disqualifies any instance where an element is the same as the one being checked (if i ≠ j), A[i] will still be checked against A[j] in order to make this qualification, so all possible combinations of A[] against A[] will be checked. This means that the number of basic operations is always n squared, where n is the array length. So using the above hypothetical array of length 4 as an input for the algorithm, the number of operations will always equal 16, or 4 squared.

The table above shows all the possible combinations that can occur when comparing each element in A against each other. It shows which combinations of A[] against A[] will be computed. MinDistance checks every possible combination, and only later filters out unnecessary ones. This is displayed alongside a truth table which shows which combinations of elements of the hypothetical array, A[a,b,c,d], are computed, and which are excluded before comparing the value of each element. If the absolute value of A[i] – A[j] is smaller than dmin, which is initially set to Infinity, then dmin is replaced with |A[i] – A[j]|. Once all elements have been compared, dmin, the smallest distance between two elements of the array, is returned.

1.1. Algorithm 2: MinDistance2

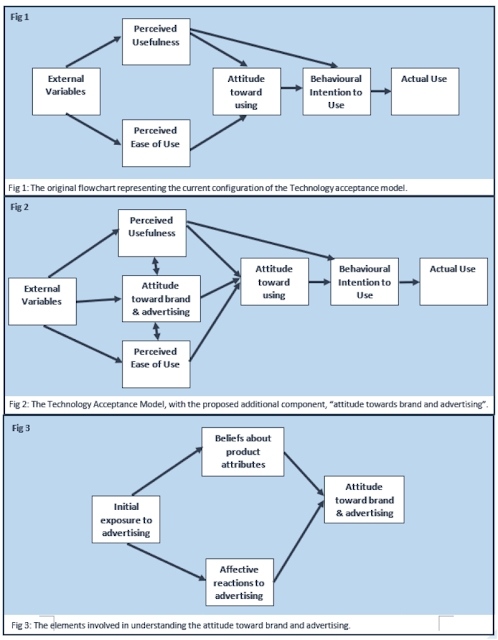

The second algorithm, MinDistance2 (Appendix B), is almost indistinguishable from MinDistance, aside from a few differences which can be visualised in the following visual aide:

The main concerns of these tests were to count the number of basic operations required to complete each algorithm, and compare their execution times several different array sizes. Several graphs were produced visualising the very clear difference between the two algorithms.

A = [a, b, c, d]

a, b, c, d = random integegrs

n = Length of A (in this case 4)

i = the position of the current element of A[] being processed

j = the position of the element of A[] being compared with i

1. Description of the Algorithms

Both of the algorithms are very similar in that they both require an array of numbers as input, iterate through the input array in some manner, and qualify each inspected element before returning an output. The input array values can be integers, floats, or doubles, it does not matter, as long as all elements within the input array are of the same type, or can be converted to an equivalent type. For simplicity, all elements of the input array are assumed to be integers ranging between 0 and 100. From here, a hypothetical array will be used to illustrate the processes of the two algorithms, and some further definitions are shown:A = [a, b, c, d]

a, b, c, d = random integegrs

n = Length of A (in this case 4)

i = the position of the current element of A[] being processed

j = the position of the element of A[] being compared with i

1.1. Algorithm 1: MinDistance

Each element of A is checked against itself and the other elements of the array. Although there is a line of code later in the algorithm which disqualifies any instance where an element is the same as the one being checked (if i ≠ j), A[i] will still be checked against A[j] in order to make this qualification, so all possible combinations of A[] against A[] will be checked. This means that the number of basic operations is always n squared, where n is the array length. So using the above hypothetical array of length 4 as an input for the algorithm, the number of operations will always equal 16, or 4 squared.

Ops

|

A[i]

|

A[j]

|

Checked

|

i ≠ j

|

1

|

a

|

a

|

T

|

F

|

2

|

a

|

b

|

T

|

T

|

3

|

a

|

c

|

T

|

T

|

4

|

a

|

d

|

T

|

T

|

5

|

b

|

a

|

T

|

T

|

6

|

b

|

b

|

T

|

F

|

7

|

b

|

c

|

T

|

T

|

8

|

b

|

d

|

T

|

T

|

9

|

c

|

a

|

T

|

T

|

10

|

c

|

b

|

T

|

T

|

11

|

c

|

c

|

T

|

F

|

12

|

c

|

d

|

T

|

T

|

13

|

d

|

a

|

T

|

T

|

14

|

d

|

b

|

T

|

T

|

15

|

d

|

c

|

T

|

T

|

16

|

d

|

d

|

T

|

F

|

The table above shows all the possible combinations that can occur when comparing each element in A against each other. It shows which combinations of A[] against A[] will be computed. MinDistance checks every possible combination, and only later filters out unnecessary ones. This is displayed alongside a truth table which shows which combinations of elements of the hypothetical array, A[a,b,c,d], are computed, and which are excluded before comparing the value of each element. If the absolute value of A[i] – A[j] is smaller than dmin, which is initially set to Infinity, then dmin is replaced with |A[i] – A[j]|. Once all elements have been compared, dmin, the smallest distance between two elements of the array, is returned.

1.1. Algorithm 2: MinDistance2

The second algorithm, MinDistance2 (Appendix B), is almost indistinguishable from MinDistance, aside from a few differences which can be visualised in the following visual aide:

Ops

|

A[i]

|

A[j]

|

Checked

|

a

|

a

|

F

|

|

1

|

a

|

b

|

T

|

2

|

a

|

c

|

T

|

3

|

a

|

d

|

T

|

b

|

a

|

F

|

|

b

|

b

|

F

|

|

4

|

b

|

c

|

T

|

5

|

b

|

d

|

T

|

c

|

a

|

F

|

|

c

|

b

|

F

|

|

c

|

c

|

F

|

|

6

|

c

|

d

|

T

|

d

|

a

|

F

|

|

d

|

b

|

F

|

|

d

|

c

|

F

|

|

d

|

d

|

F

|

The image above is more of an abstract example of how a hypothetical array of 4 values is used by MinDistance2.

As can be seen it begins by comparing the values of the array against themselves, but instead of looping from 0 to n-1, the last element of the array is left out, and the loop only runs from i=0 to i=n-2. The inner loop within this begins comparing the values in the ith position against each element in the list, but instead of starting from the first element in the list, it begins at the 2nd element in the list, which avoids any items being checked against themselves. Together these two constraints reduce the number of elements in the array to be compared, in effect filtering out all of the repeated comparisons, and comparisons where both elements are the same.

From this point, much like the first algorithm, MinDistance2 also checks the two elements in question to see if |A[i] – A[j]| is smaller than dmin, and by the end of the algorithm the smallest distance between two elements in the array is identified and returned.

As can be seen it begins by comparing the values of the array against themselves, but instead of looping from 0 to n-1, the last element of the array is left out, and the loop only runs from i=0 to i=n-2. The inner loop within this begins comparing the values in the ith position against each element in the list, but instead of starting from the first element in the list, it begins at the 2nd element in the list, which avoids any items being checked against themselves. Together these two constraints reduce the number of elements in the array to be compared, in effect filtering out all of the repeated comparisons, and comparisons where both elements are the same.

From this point, much like the first algorithm, MinDistance2 also checks the two elements in question to see if |A[i] – A[j]| is smaller than dmin, and by the end of the algorithm the smallest distance between two elements in the array is identified and returned.

2. Theoretical Analysis of Algorithm

2.1. Choice of Basic Operation

In order to obtain useful information, it is necessary that the choice of basic operation be the same for both algorithms during the tests. It can be seen that the structure of both of the algorithms are almost identical except for the number of times each for loop will iterate. Both of the algorithms contain all of their necessary processes within the nested for loop.

The inner for loop contains the majority of the changes to the original algorithm and is repeated the most number of times, so it will be the Choice of basic operation. Each algorithm has its basic operation outlined in red in appendix A & appendix B.

2.2. Choice of Input size

The inner for loop contains the majority of the changes to the original algorithm and is repeated the most number of times, so it will be the Choice of basic operation. Each algorithm has its basic operation outlined in red in appendix A & appendix B.

2.2. Choice of Input size

To begin with, each element of the array was populated with a random number in the range of 1 to 100 with the use of a pseudorandom number generator and this fact stayed constant throughout most of the experiments, aside from some of the initial functional testing to see the effects of larger element sizes.

Array length for the tests were a little bit trickier to choose, and were chosen as the tests were carried out. Initially, array length was set to two and was incremented by 1 for each subsequent test, but very quickly it was discovered that the trend in the data was much more gradual and required larger steps between data points so as to not waste time.

The next attempt involved setting the array size to two and applying much larger incrementations; this time doubling the length of n for each test. This time the tests were much quicker and a trend was much more clearly visible in the output data. But in this case there were only about 16 data points available after doubling the array length to about 65,000. The solution to getting more datapoints along the line was simply to multiply n by a smaller number than 2, but a higher number than 1. To obtain the graphs below, for each test, each time a new array was created, it’s length would be %5 larger than the previous array.

Originally Microsoft Excel was planned to be used for graphic visualisation of the data but large datasets were causing memory problems and Python’s pyplot library was chosen for its simplicity and flexibility for use with large datasets.

3.1. Functional Testing

Array length for the tests were a little bit trickier to choose, and were chosen as the tests were carried out. Initially, array length was set to two and was incremented by 1 for each subsequent test, but very quickly it was discovered that the trend in the data was much more gradual and required larger steps between data points so as to not waste time.

The next attempt involved setting the array size to two and applying much larger incrementations; this time doubling the length of n for each test. This time the tests were much quicker and a trend was much more clearly visible in the output data. But in this case there were only about 16 data points available after doubling the array length to about 65,000. The solution to getting more datapoints along the line was simply to multiply n by a smaller number than 2, but a higher number than 1. To obtain the graphs below, for each test, each time a new array was created, it’s length would be %5 larger than the previous array.

3. Methodology, Tools, and Techniques

To test the algorithm, a 64-bit Windows Intel i5 @ 3.50GHz was used. The general-purpose programming language, C++, was used to calculate the time complexity and running time of both algorithms. This was implemented in one file. With the multiplatform IDE Codeblocks, Several C++ console applications were created for different purposes during this analysis, which are detailed below in section 3.1. Functional Testing.Originally Microsoft Excel was planned to be used for graphic visualisation of the data but large datasets were causing memory problems and Python’s pyplot library was chosen for its simplicity and flexibility for use with large datasets.

3.1. Functional Testing

Firstly, the algorithm itself was produced in c++ and, several useful functions and counters were built around it for initial functional testing. This started off by simply outputting dmin to the console for a quick analysis and trying again with different random values in the input array, but as more functions were built around the algorithm, eventually useful data was sent to a text file in comma separated values to be graphed in python.

Appendix C shows the console output from functional tests in CodeBlocks IDE. Several of these kinds of tests were performed with different array sizes to confirm both algorithms give equivalent outputs when given equivalent outputs.

Both algorithms needed to be treated equally in order to obtain useful results, so the array was created with dynamic memory that only was deleted after both algorithms had used it. This also means that the testing environment saved memory by avoiding making an array for each algorithm.

After initial testing this the cpp file was duplicated to make a second file called timer 2.cpp. This was a much more stripped down version of the previous cpp file and but contained loops for automated testing.

Appendix C shows the console output from functional tests in CodeBlocks IDE. Several of these kinds of tests were performed with different array sizes to confirm both algorithms give equivalent outputs when given equivalent outputs.

Both algorithms needed to be treated equally in order to obtain useful results, so the array was created with dynamic memory that only was deleted after both algorithms had used it. This also means that the testing environment saved memory by avoiding making an array for each algorithm.

After initial testing this the cpp file was duplicated to make a second file called timer 2.cpp. This was a much more stripped down version of the previous cpp file and but contained loops for automated testing.

4. Experimental Results

4.1. Average-Case Number of Basic Operations

All cases with identical input size will implement an identical number of basic operations. Therefore, the worst case scenario is one where the length of the array is so high that it takes too long to compute, and the best case scenario is when there is the minimum number of elements in A and as such takes the minimum amount of time to compute. The number of basic operations which are to be implemented is directly proportional to the input size, and therefore the average-case number of basic operations can simply be considered the case in which the computation is completed in the most efficient time for the problem.

Regardless, the average, worst, and best case number of basic operations are all identical. Figure A demonstrates this by testing array lengths of different sizes, multiple times each and graphing the results to see that there is only one point per algorithm on the graph for each array length.

Regardless, the average, worst, and best case number of basic operations are all identical. Figure A demonstrates this by testing array lengths of different sizes, multiple times each and graphing the results to see that there is only one point per algorithm on the graph for each array length.

4.2. Average-Case Execution time

Figure B represents the time taken for each algorithm to compute inputs of various array lengths. As can be seen there is a very clear trend in both sets of data that show that the length of input array and number of operations are highly correlated as there is only a small amount of variance in the time taken for each array size and any time variance (elongated dots on the graph) can be seen on both curves. This indicates that this testing method is not likely to favour one algorithm over the other.

5. Conclusion

Although both algorithms present very similar curves in both analyses, MinDistance2 is displaying both lower execution times and a lower number of basic operations when compared to the original algorithm. Just by a brief summary of the data being visually displayed on a graph, the theory that MinDistance2 is more efficient than MinDistance on average can be instantly accepted.

The differences between the two algorithms may not have been very noticeable for the first 10000 or so array lengths, but by around 120,000 array length, there is almost a 21 second difference in running time between the two algorithms., and the number is set to expand until an error is thrown violating the max length of an array or element. It is therefore clear that while both algorithms are of the same efficiency class, MinDistance displays characteristics that are to be expected if it has a larger constant multiplier than MinDistance2.

Tables and Figures

Appendix

|

Appendix A:

The MinDistance algorithm, analysed

in the Codeblocks IDE. Outlined in

red is the choice of basic operation.

|

|

Appendix B:

The MinDistance2 algorithm, analysed

in the Codeblocks IDE. Outlined in

red is the choice of basic operation.

|

Comments

Post a Comment